The weekend and holidays should be excluded. It is easy to exclude weekends, but the holiday are specific to each place and should be stored in an additional table. I store the holidays in a table HOLIDAYS_VD:

Select the time interval between two dates

The SQL query is adapted from here.

select count(*) from (

-- select * from (

select * from (

select dayinbetween, to_char( dayinbetween, 'DY' ) weekday from (

select startday+level-1 dayinbetween from (

select startday ,endday-startday diffdays from (

select to_date('01.02.2000','DD.MM.YYYY') endday, to_date('29.02.2000','DD.MM.YYYY') startday from dual

)

) connect by level <= diffdays+1

)

)where weekday not in ( 'SAT', 'SUN' )--all not weeknds in between

) a

left join holidays_vd h on a.dayinbetween=h.day

where h.day is null

order by dayinbetween;

It is almost right, but it does not return 0 is the two dates are the same. I did not corrected it and abandoned it because I needed a PL/SQL function. It is below, it is based on the query above and it seems to work fine.

PL/SQL function subtracting dates and exluding weekends and holidays

create or replace PACKAGE indicators AS TYPE DateListType IS TABLE OF DATE; function subtract_business_dates( startdate DATE, enddate DATE) return integer; END ;

create or replace PACKAGE BODY indicators AS

function subtract_business_dates(

startdate DATE,

enddate DATE) return integer

IS

result INTEGER:=1;

dateList DateListType:=DateListType();

difference_days INTEGER;

BEGIN

difference_days:=trunc(enddate)-trunc(startdate);

if difference_days<0 then

RAISE_APPLICATION_ERROR(-20000, 'The first day is after the second date');

end if;

if difference_days=0 then

return 0;

end if;

dateList.extend(difference_days);

FOR i IN dateList.first..dateList.last

LOOP

dateList(i):=startdate+i;

END LOOP;

select count(*) into result from (

select * from (

select dayinbetween, to_char( dayinbetween, 'DY' ) weekday from (

select COLUMN_VALUE dayinbetween from (table(dateList))

)

)where weekday not in ( 'SAT', 'SUN' )--all not weeknds in between

) a

left join holidays_vd h on a.dayinbetween=h.day

where h.day is null

;

return result;

END;

END ;

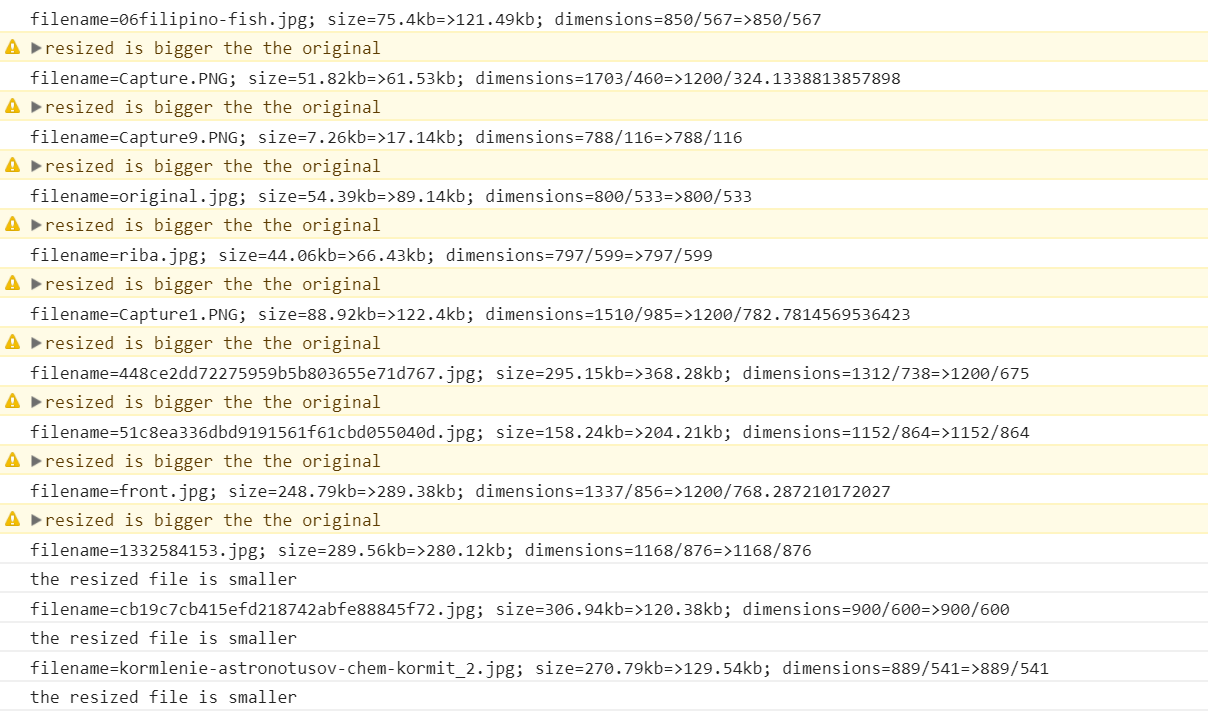

Some tests to make sure that the function works well. Note, the table with holidays includes a test date 14.02.2000.

select indicators.subtract_business_dates(to_date('01.02.2000','DD.MM.YYYY'),to_date('29.02.2000','DD.MM.YYYY')) from dual;

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('01.02.2000','DD.MM.YYYY')) from dual; --exception

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('03.02.2000','DD.MM.YYYY')) from dual;--thu

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('04.02.2000','DD.MM.YYYY')) from dual;--fri

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('05.02.2000','DD.MM.YYYY')) from dual;--sat

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('06.02.2000','DD.MM.YYYY')) from dual;--sun

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('07.02.2000','DD.MM.YYYY')) from dual;--mon

select indicators.subtract_business_dates(to_date('03.02.2000','DD.MM.YYYY'),to_date('08.02.2000','DD.MM.YYYY')) from dual;--tue

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('04.02.2000','DD.MM.YYYY')) from dual;--fri

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('05.02.2000','DD.MM.YYYY')) from dual;--sat

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('06.02.2000','DD.MM.YYYY')) from dual;--sun

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('07.02.2000','DD.MM.YYYY')) from dual;--mon

select indicators.subtract_business_dates(to_date('04.02.2000','DD.MM.YYYY'),to_date('08.02.2000','DD.MM.YYYY')) from dual;--tue

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('10.02.2000','DD.MM.YYYY')) from dual;--thu

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('11.02.2000','DD.MM.YYYY')) from dual;--fri

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('12.02.2000','DD.MM.YYYY')) from dual;--sat

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('13.02.2000','DD.MM.YYYY')) from dual;--sun

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('14.02.2000','DD.MM.YYYY')) from dual;--mon holiday

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('15.02.2000','DD.MM.YYYY')) from dual;--tue

select indicators.subtract_business_dates(to_date('10.02.2000','DD.MM.YYYY'),to_date('16.02.2000','DD.MM.YYYY')) from dual;--wed